Introduction: The Paradigm Shift to AI Supercomputing

The rapid ascent of Generative AI has fundamentally altered not just user interfaces, but the physical reality of the cloud. For the past decade, enterprise software engineering prioritized microservices—decoupling applications into independent, portable components. However, training frontier AI models demands the inverse. It requires massive, monolithic synchronization where hundreds of thousands of processors function as a single, cohesive unit. We are witnessing a transition from the traditional data center model to what Microsoft Azure defines as the "AI Superfactory."

This shift represents the pinnacle of Legacy Modernization, redefining the core principles of modern Cloud Architecture. Azure has evolved beyond the concept of isolated server farms to a design where global infrastructure functions as a fungible pool of compute. By pushing the boundaries of physics—managing latency, heat, and data transmission speeds—Azure has engineered an infrastructure capable of near-infinite scale. This is not merely about adding capacity; it is an architectural revolution. It requires the tight integration of custom silicon, such as the Maia 100, with novel network topologies and automated orchestration to treat geographically distributed hardware as a unified, fluid resource.

For business owners and CTOs, this evolution presents a critical inflection point: Is your current infrastructure an accelerator for innovation, or is it accumulating technical debt? While few enterprises need to train trillion-parameter models, the principles driving the AI Superfactory—resilience, extreme automation, and deep integration—have become the new benchmarks for Enterprise Software Engineering. In a Cloud-Native ecosystem, Scalable Architecture is no longer a luxury; it is the primary metric of survival.

At OneCubeTechnologies, we view these high-performance architectures as the blueprint for future-proofing your operations. Leveraging our expertise in Legacy Modernization and advanced Cloud Architecture, we translate these enterprise-grade concepts into accessible strategies. Whether optimizing for Scalable Architecture or implementing sophisticated Business Automation, the goal remains the same: uncompromising operational resilience. The question is no longer just about uptime; it is about how automated your failover is and how efficiently your services communicate. The answers lie in understanding the machine that builds the machines.

The Physical Foundation: Redefining Datacenter Architecture

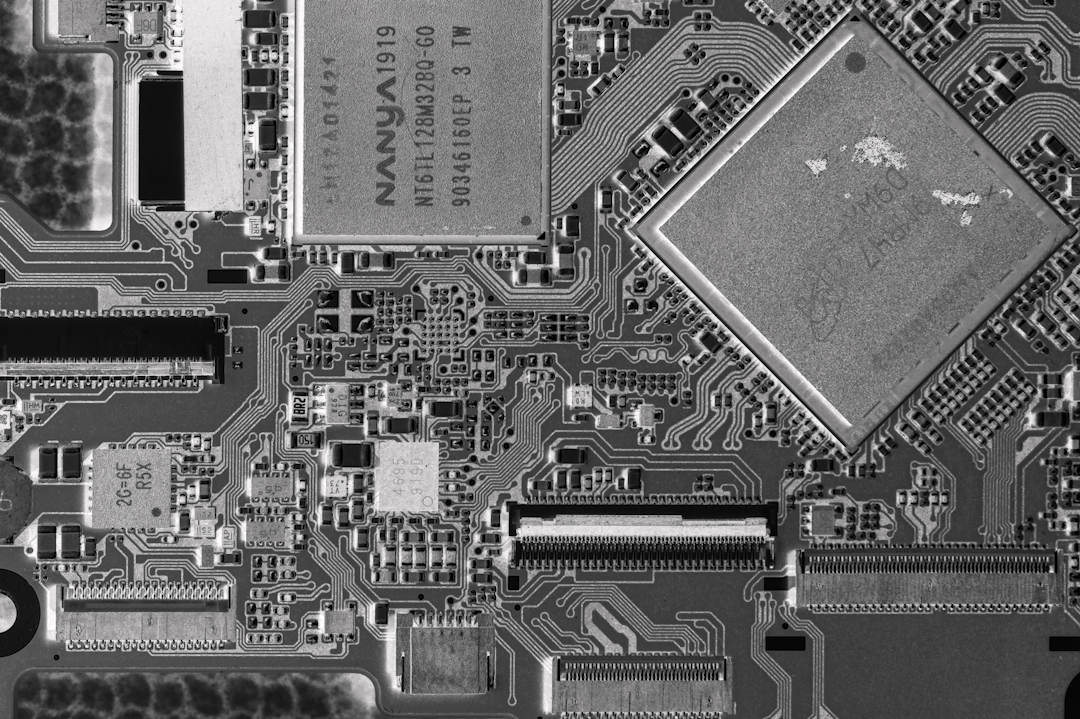

While the "cloud" is often conceptualized as ethereal and boundless, its reality is grounded in physical warehouses of silicon, copper, and fiber. These facilities constitute the bedrock of modern Cloud Architecture. For nearly two decades, data centers were engineered for general-purpose computing. However, the monolithic processing demands of Generative AI have exposed the limitations of these traditional designs, necessitating a fundamental rethinking of Enterprise Software Engineering. To build a machine capable of "infinite scale," Microsoft was forced to confront a formidable adversary: the laws of physics.

Verticality: Fighting the Speed of Light

The primary bottleneck in AI supercomputing is not merely processing speed, but latency: the time required for data to travel between Graphics Processing Units (GPUs). Training a massive model requires thousands of GPUs to exchange data continuously. In a traditional, sprawling single-story warehouse, cable length alone introduces delays governed by the speed of light.

To mitigate this, Azure introduced the "Fairwater" datacenter architecture. Departing from standard single-story warehouse designs, Fairwater uses a two-story building layout. By stacking server racks vertically, engineers drastically shorten the cable runs between them. This three-dimensional density tightens the physical loop, fitting more compute into a smaller footprint and helping thousands of GPUs behave as a coherent, synchronized supercomputer.
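
A rough worked estimate shows why cable length matters. Assuming signals travel through glass fiber with a refractive index n of about 1.47, the one-way propagation delay over a cable of length L is:

$$
t = \frac{n L}{c} \approx \frac{1.47 \times 1\,\text{m}}{3.0 \times 10^{8}\,\text{m/s}} \approx 4.9\,\text{ns per meter}
$$

Shortening a hypothetical cable run by 100 m therefore saves roughly 0.49 µs per traversal. That is small for a single message, but collective operations such as gradient synchronization repeat these exchanges continuously across thousands of GPUs, so the savings compound.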

Density: The Thermal Challenge

Concentrating highly potent silicon into a dense, two-story structure creates a significant thermodynamic challenge. The customized racks in a Fairwater facility are engineered for extreme density, drawing approximately 140 kW per rack, with row densities reaching roughly 1,360 kW (1.36 MW). For perspective, a standard enterprise server rack typically draws less than 10 kW, so each Fairwater rack carries more than ten times that load. At this heat density, traditional air conditioning is no longer a practical option.

Azure’s solution is a facility-wide closed-loop liquid cooling system. Rather than blowing air over chips, the system pumps coolant through cold plates mounted directly on high-power components, pulling heat away far more efficiently than air can. The design also conserves water: because the loop is closed, the coolant is not lost to evaporation, and the initial fill can circulate for more than six years without requiring replacement.
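
A simple heat-balance estimate shows why air runs out of headroom. The sketch below applies m_dot = P / (c_p * delta_T) to a 140 kW rack. The 10 K coolant temperature rise and the fluid properties are textbook approximations chosen for illustration, not Fairwater design parameters.

```python
# Heat-removal estimate: the mass flow needed to carry away P watts with a
# coolant temperature rise of delta_t kelvin is m_dot = P / (c_p * delta_t).
# All fluid properties and the 10 K rise are illustrative assumptions.

WATER_CP = 4186.0       # specific heat of water, J/(kg*K)
WATER_DENSITY = 1000.0  # kg/m^3 (approximate)
AIR_CP = 1005.0         # specific heat of air, J/(kg*K)
AIR_DENSITY = 1.2       # kg/m^3, roughly room conditions


def volumetric_flow_m3_per_s(power_w: float, cp: float, density: float, delta_t_k: float) -> float:
    """Volume of coolant per second needed to absorb power_w with a delta_t_k rise."""
    mass_flow = power_w / (cp * delta_t_k)  # kg/s
    return mass_flow / density              # m^3/s


RACK_POWER_W = 140_000  # ~140 kW per rack, the figure quoted above
DELTA_T = 10.0          # assumed coolant temperature rise, K

water = volumetric_flow_m3_per_s(RACK_POWER_W, WATER_CP, WATER_DENSITY, DELTA_T)
air = volumetric_flow_m3_per_s(RACK_POWER_W, AIR_CP, AIR_DENSITY, DELTA_T)

print(f"water: {water * 1000 * 60:.0f} L/min")
print(f"air:   {air:.1f} m^3/s")
print(f"air needs ~{air / water:.0f}x the volume of water")
```

Under these assumptions a single rack needs about 200 liters of water per minute, versus more than 11 cubic meters of air per second, which is the practical argument for liquid cooling at this density.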

The Fungible Fleet: Planet-Scale Unity

Perhaps the most strategic architectural shift is the concept of the "Fungible Fleet," a core tenet of this Legacy Modernization. In traditional cloud architectures, distinct data centers (for instance, one in Wisconsin and one in Atlanta) operated as isolated islands. Spanning a single synchronous workload across both was architecturally prohibitive.

Azure has dismantled this isolation. By connecting these high-density Fairwater sites through a high-bandwidth AI WAN (Wide Area Network), Microsoft treats geographically distributed facilities as a single, elastic pool of resources. This enables dynamic workload allocation: if power is cheaper or capacity is more available in a different region, the system can route the job there. For the end user, the physical location of the hardware is abstracted away, delivering a truly Cloud-Native, "planet-scale" computer that forms the foundation of a Scalable Architecture.
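
A toy placement function illustrates the fungible-fleet idea: the caller asks for capacity, and the scheduler, not the caller, decides where the job runs. The site names, capacities, and energy prices below are invented, and a production scheduler would weigh many more factors (network locality, data residency, failure domains).

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Site:
    """A hypothetical datacenter site in a pooled fleet."""
    name: str
    free_gpus: int
    power_cost_per_mwh: float  # illustrative energy price


def place_job(sites: Sequence[Site], gpus_needed: int) -> Optional[Site]:
    """Pick the cheapest site that can host the whole job, or None if none fit."""
    candidates = [s for s in sites if s.free_gpus >= gpus_needed]
    if not candidates:
        return None
    return min(candidates, key=lambda s: s.power_cost_per_mwh)


fleet = [
    Site("site-a", free_gpus=4_000, power_cost_per_mwh=62.0),
    Site("site-b", free_gpus=12_000, power_cost_per_mwh=48.0),
    Site("site-c", free_gpus=20_000, power_cost_per_mwh=55.0),
]

print(place_job(fleet, 8_000).name)   # site-b
print(place_job(fleet, 16_000).name)  # site-c
print(place_job(fleet, 50_000))       # None
```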

OneCube "Level Up" Tip for the .NET Architect:

Audit Latency in Your Cloud Architecture. You may not be training a trillion-parameter model, but the laws of physics still govern your application's performance.

- Action: For real-time data processing, a senior .NET Architect must ensure compute resources and data storage are co-located within the same Availability Zone or region to minimize latency.

- Strategy: Treat cloud regions as distinct performance tiers rather than interchangeable commodities. Analyzing and reducing the physical distance data travels is a fundamental principle for creating a performant, Scalable Architecture.
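
Auditing starts with measurement. The hypothetical helper below times repeated calls to a dependency and reports median and tail (p99) latency. Running it from the application host against same-zone and cross-region endpoints makes the cost of distance visible. The function name and default sample count are illustrative choices, not part of any Azure SDK.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency_ms(call: Callable[[], object], samples: int = 200) -> Dict[str, float]:
    """Time repeated calls to a dependency and summarize latency in milliseconds."""
    if samples < 2:
        raise ValueError("need at least two samples to compute percentiles")
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        call()
        timings.append((time.perf_counter() - start) * 1000.0)
    # 'inclusive' keeps percentile estimates within the observed range;
    # quantiles(n=100) returns the 1st..99th percentile cut points.
    cuts = statistics.quantiles(timings, n=100, method="inclusive")
    return {"p50": statistics.median(timings), "p99": cuts[98], "max": max(timings)}


if __name__ == "__main__":
    # Stand-in workload; in practice, wrap a lightweight call (for example a
    # health-check query) against the database or service you depend on.
    print(measure_latency_ms(lambda: sum(range(1_000))))
```

Tail latency matters as much as the median: a request that fans out to several dependencies waits for the slowest one.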

Enabling Scale: Custom Silicon and Next-Generation Networking

A state-of-the-art facility creates the structural envelope, but the true velocity of an AI Superfactory is derived from its internal components. To train models with trillions of parameters, modern Cloud Architecture must resolve two fundamental constraints: moving data as quickly as physics allows and processing it with maximum efficiency. This necessitates a departure from off-the-shelf components toward a redesigned hardware stack, a core tenet of advanced Enterprise Software Engineering.

The Nervous System: Hollow Core Fiber

In traditional fiber optic cables, data travels as pulses of light through a solid core of glass (silica). Although fast, light in glass moves only about two-thirds as fast as in a vacuum, because silica has a refractive index of roughly 1.47. In high-performance computing, where small per-message delays compound across millions of synchronization steps, that overhead matters.

Azure’s solution is the deployment of Hollow Core Fiber (HCF). As the name implies, these cables guide light through an air-filled center rather than solid glass. Because air's refractive index is close to 1, light travels approximately 47% faster than in silica, which cuts propagation latency by roughly 33% over long distances. By implementing HCF, Microsoft effectively upgrades the "nervous system" of its Superfactory, substantially reducing the delay between geographically distributed datacenters.
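
The two percentages are linked by simple arithmetic: if light is about 1.47 times faster in air, travel time falls to roughly 1/1.47 of the original. The sketch below computes one-way propagation delay over a hypothetical 1,000 km route; the refractive indices are typical textbook values, not Microsoft's published fiber specifications.

```python
SPEED_OF_LIGHT_KM_PER_MS = 299_792.458 / 1000.0  # vacuum speed of light, km per ms


def one_way_delay_ms(distance_km: float, refractive_index: float) -> float:
    """Propagation delay: distance divided by the speed of light in the medium (c / n)."""
    return distance_km * refractive_index / SPEED_OF_LIGHT_KM_PER_MS


DISTANCE_KM = 1_000.0  # hypothetical fiber route length
N_SILICA = 1.47        # typical index of solid-core silica fiber (assumed)
N_AIR = 1.0003         # light guided mostly through air in hollow-core fiber (assumed)

solid = one_way_delay_ms(DISTANCE_KM, N_SILICA)
hollow = one_way_delay_ms(DISTANCE_KM, N_AIR)

print(f"solid core : {solid:.2f} ms")
print(f"hollow core: {hollow:.2f} ms")
print(f"reduction  : {1 - hollow / solid:.0%}")
```

With these assumed indices the reduction comes out near 32 percent, in line with the roughly one-third figure above. The saving applies to propagation delay only, not to switching or serialization time.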

The Flat Network Topology

Raw transmission speed is irrelevant if network congestion creates bottlenecks. Traditional datacenter networks often utilize multi-layered "Clos" topologies, where data must navigate through multiple switches to reach its destination. Each hop introduces latency and architectural complexity.

To support the bandwidth requirements of hundreds of thousands of GPUs, Azure implemented a "single flat network." Built on the open-source SONiC network operating system, this Ethernet-based fabric reduces the number of switching tiers, so traffic between GPUs crosses fewer switches, and provides 800 Gbps of GPU-to-GPU connectivity. The result is a large, less congested grid that supports a truly Scalable Architecture by reducing transmission bottlenecks.
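
The benefit of fewer switching tiers can be estimated by counting hops. In a folded Clos (fat-tree) fabric, the longest path climbs to the top tier and back down, crossing 2 * tiers - 1 switches. The per-hop latency below is an assumed round number for illustration, not a measured figure for Azure's switches.

```python
def worst_case_switch_hops(tiers: int) -> int:
    """Switches traversed on the longest path in a folded Clos / fat-tree:
    up through every tier to the top, then back down (2 * tiers - 1)."""
    if tiers < 1:
        raise ValueError("a fabric needs at least one switching tier")
    return 2 * tiers - 1


PER_HOP_NS = 600  # assumed per-switch forwarding latency, illustration only

for tiers in (3, 2):
    hops = worst_case_switch_hops(tiers)
    print(f"{tiers}-tier fabric: {hops} switch hops, ~{hops * PER_HOP_NS} ns in switches")
```

Removing one tier takes the worst case from five switches to three, and it also removes a layer where congestion can build up.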

Vertical Integration: The Move to Custom Silicon

Historically, cloud providers relied almost exclusively on third-party vendors like Intel, AMD, and NVIDIA. However, to achieve the specific efficiencies required for Generative AI, Microsoft has embraced vertical integration, designing proprietary chips tailored to its own software stack. This strategic shift is a form of Legacy Modernization, reducing dependency on general-purpose hardware in favor of specialized engineering.

1. Azure Maia 100: The Muscle

The Maia 100 is an AI accelerator designed for large-scale AI training and inference, including Large Language Models (LLMs). Fabricated on a 5nm process with 105 billion transistors, it is optimized for the low-precision data formats essential to AI workloads. Rather than serving as a general-purpose GPU, Maia is tuned specifically for deep learning throughput. Each Maia rack is paired with a custom "sidekick": an adjacent liquid-cooling unit that, much like a car's radiator, sends coolant to cold plates on the chips and carries the heat away, allowing Maia to run in existing datacenter facilities while maximizing performance per watt.

2. Azure Cobalt 100: The Brain

While accelerators handle the AI computation, the system still requires a Central Processing Unit (CPU) to orchestrate data movement and run general cloud workloads. Enter the Azure Cobalt 100. Based on the ARM architecture, this 128-core chip is engineered for efficiency, with Microsoft reporting up to 40% better performance than the previous generation of ARM-based servers in Azure. Running general-purpose processing on efficient CPUs leaves more of the power and cost budget for high-intensity AI tasks.
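
The Maia description mentions low-precision data formats. A common idea behind such formats is to store values in few bits and share one scaling factor across a small block, so each block keeps useful precision even when magnitudes differ widely between blocks. The sketch below shows plain 8-bit block-wise symmetric quantization; it is a simplified illustration of the general technique, not the specific formats Maia implements.

```python
import math
from typing import List, Tuple


def quantize_block(values: List[float], bits: int = 8) -> Tuple[float, List[int]]:
    """Symmetric quantization of one block to signed integers with a shared scale."""
    qmax = 2 ** (bits - 1) - 1  # e.g. 127 for 8 bits
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, codes


def dequantize_block(scale: float, codes: List[int]) -> List[float]:
    return [c * scale for c in codes]


def quantize_blockwise(values: List[float], block_size: int = 32, bits: int = 8):
    """Split a flat tensor into blocks, each with its own scale."""
    return [quantize_block(values[i:i + block_size], bits)
            for i in range(0, len(values), block_size)]


# First block holds small values, second block large ones.
weights = [math.sin(i / 7.0) * (10.0 if i >= 32 else 0.1) for i in range(64)]
blocks = quantize_blockwise(weights, block_size=32, bits=8)
restored = [x for scale, codes in blocks for x in dequantize_block(scale, codes)]
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(f"{len(blocks)} blocks, max reconstruction error {max_err:.5f}")
```

Because the small-valued block gets its own, much finer scale, its values are not crushed to zero by the large values in the neighboring block.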

OneCube "Level Up" Tip for the .NET Architect:

Optimize Your Cloud Spend with Specialized Silicon. You do not need to manufacture custom chips to benefit from hardware specialization.

- The Trap: Many engineering teams default to standard x86 (Intel/AMD) instances for every workload out of habit, often paying more than necessary for the same work.

- The Strategy: A savvy .NET Architect evaluates ARM-based instances (such as Azure Cobalt or AWS Graviton) for general-purpose applications, web servers, and microservices.

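- The Payoff: ARM processors often offer better price-performance. Because modern .NET runs natively on ARM64, many managed workloads can move with little or no code change, provided their native dependencies also ship ARM64 builds. Optimizing instance selection is one of the most effective ways to reduce cloud costs and build a more efficient, Scalable Architecture.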
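
Price-performance is best judged per unit of work rather than per hour. The comparison below normalizes hourly prices by throughput from a load test; every number is a hypothetical placeholder to be replaced with your own measurements and current pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceOption:
    name: str
    hourly_price_usd: float     # hypothetical on-demand price
    requests_per_second: float  # throughput measured in your own load test

    def cost_per_million_requests(self) -> float:
        requests_per_hour = self.requests_per_second * 3600
        return self.hourly_price_usd / requests_per_hour * 1_000_000


options = [
    InstanceOption("x86-4vcpu", hourly_price_usd=0.192, requests_per_second=2_400),
    InstanceOption("arm-4vcpu", hourly_price_usd=0.154, requests_per_second=2_300),
]

# Cheapest per unit of work first.
for o in sorted(options, key=InstanceOption.cost_per_million_requests):
    print(f"{o.name}: ${o.cost_per_million_requests():.4f} per million requests")
```

In this made-up example the ARM option is slightly slower per instance yet cheaper per request, which is exactly the trade-off an hourly-price comparison hides.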

Orchestration and Strategy: Applying Azure's Principles to Your Business

Possessing the fastest custom silicon and the most efficient cabling is meaningless if the governing software cannot manage the complexity. In a system involving hundreds of thousands of processors, hardware failure is not a possibility; it is a statistical certainty. This is where Project Forge enters the narrative, representing the pinnacle of Business Automation in cloud operations.

Project Forge acts as the orchestration layer—the "mind"—of the AI Superfactory. It resolves a critical operational challenge: How do you execute a cohesive training job on hardware that may fail, require maintenance, or be located across diverse time zones? The solution lies in virtualization and transparent checkpointing.

The Virtual Cluster: Abstracting Reality

Project Forge decouples the workload from the physical hardware. Instead of assigning a job to a specific physical location, the system creates a "virtual cluster." This software abstraction presents a clean, unified interface to the developer, while the scheduler manages the complexities of identifying available GPU capacity across the global fleet.

This creates a highly Scalable Architecture in which the physical infrastructure is fungible, meaning replaceable and interchangeable. If a specific server rack goes offline, the scheduler can shift the affected computation to healthy resources without user intervention or awareness.
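
The virtual-cluster idea can be sketched as a level of indirection: the job addresses logical ranks, and a scheduler owns the mapping from ranks to physical nodes. The class and method names below are hypothetical and say nothing about how Project Forge is actually implemented; in a real system the rebound ranks would also be restored from saved state.

```python
from typing import Dict, List


class VirtualCluster:
    """Hypothetical mapping of a job's logical ranks onto physical nodes.

    The job only ever sees rank numbers; which machine backs each rank is
    a detail the scheduler may change at any time."""

    def __init__(self, ranks: int, spare_nodes: List[str]):
        if len(spare_nodes) < ranks:
            raise ValueError("not enough nodes to start the job")
        self._spares = list(spare_nodes)
        self.placement: Dict[int, str] = {r: self._spares.pop(0) for r in range(ranks)}

    def handle_node_failure(self, failed_node: str) -> None:
        """Rebind any rank on the failed node to a healthy spare."""
        for rank, node in self.placement.items():
            if node == failed_node:
                if not self._spares:
                    raise RuntimeError("no spare capacity; job must wait")
                self.placement[rank] = self._spares.pop(0)


cluster = VirtualCluster(ranks=4, spare_nodes=["n1", "n2", "n3", "n4", "n5", "n6"])
cluster.handle_node_failure("n2")
print(cluster.placement)  # {0: 'n1', 1: 'n5', 2: 'n3', 3: 'n4'}
```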

Transparent Checkpointing: The Key to 95% Utilization

The most significant technical achievement of Project Forge is transparent checkpointing. Training a massive AI model can require months of computation. In traditional environments, a GPU failure on day 59 of a 60-day cycle could crash the entire job, resulting in significant financial loss.

Project Forge mitigates this risk by periodically saving the complete state of the job, including GPU memory contents and CPU registers, to durable storage, without requiring changes to the training code. If a failure occurs, the system pauses, swaps out the compromised hardware for healthy nodes, and resumes the job from the most recent checkpoint, so only the work performed since that checkpoint is lost rather than the entire run.
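
Project Forge checkpoints transparently, but the same principle can be applied explicitly in application code. The sketch below is a hypothetical, application-level analogue: it persists progress every few steps with an atomic file write, so a crash costs only the work done since the last checkpoint. Unlike a transparent system, it does not capture GPU or process memory.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, state: dict) -> None:
    """Write state to a temp file, then rename over the target, so a crash
    mid-write never leaves a torn checkpoint (rename is atomic on POSIX)."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> dict:
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "total": 0}


def run(path: str, steps: int, checkpoint_every: int = 10, fail_at: int = -1) -> dict:
    """Sum 0..steps-1, checkpointing periodically; optionally simulate a crash."""
    state = load_checkpoint(path)  # resume from the last saved step, if any
    for step in range(state["step"], steps):
        if step == fail_at:
            raise RuntimeError(f"simulated hardware failure at step {step}")
        state["total"] += step
        state["step"] = step + 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state


if __name__ == "__main__":
    ckpt = os.path.join(tempfile.mkdtemp(), "job.json")
    try:
        run(ckpt, steps=100, fail_at=57)
    except RuntimeError as err:
        print(err)
    print(run(ckpt, steps=100))  # resumes at step 50, not 0: {'step': 100, 'total': 4950}
```

The checkpoint interval is the key trade-off: checkpointing more often loses less work on failure but spends more time writing state.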

This technology also drives efficiency by letting Azure sell "spare" capacity to low-priority jobs. If a high-priority customer needs those GPUs, the system "freezes" the low-priority job, saves its state, and evicts it; once capacity frees up, the job resumes where it left off. This elasticity reportedly drives hardware utilization above 95%, an unusually high figure for high-performance Cloud Architecture.
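
The preempt-and-resume behavior can be illustrated with a toy scheduler. Everything here is hypothetical: the class, the integer priorities (lower means more important), and the assumption that an evicted job's state has already been checkpointed as described above rather than lost.

```python
import heapq
import itertools
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(eq=False)  # compare jobs by identity, not by field values
class Job:
    name: str
    priority: int  # lower number = more important
    gpus: int


class PreemptiveScheduler:
    """Toy scheduler: makes room for important work by evicting lower-priority
    jobs (state assumed checkpointed) and re-queuing them to resume later."""

    def __init__(self, total_gpus: int):
        self.free = total_gpus
        self.running: List[Job] = []
        self.waiting: List[Tuple[int, int, Job]] = []  # heap of (priority, seq, job)
        self._seq = itertools.count()

    def _enqueue(self, job: Job) -> None:
        heapq.heappush(self.waiting, (job.priority, next(self._seq), job))

    def submit(self, job: Job) -> None:
        # Eviction candidates: strictly less important jobs, least important first.
        victims = sorted((j for j in self.running if j.priority > job.priority),
                         key=lambda j: j.priority, reverse=True)
        if self.free + sum(j.gpus for j in victims) < job.gpus:
            self._enqueue(job)  # cannot fit even after eviction; wait instead
            return
        while self.free < job.gpus:
            victim = victims.pop(0)
            self.running.remove(victim)
            self.free += victim.gpus
            self._enqueue(victim)  # "frozen" and re-queued
        self.running.append(job)
        self.free -= job.gpus

    def finish(self, name: str) -> None:
        job = next(j for j in self.running if j.name == name)
        self.running.remove(job)
        self.free += job.gpus
        # Resume waiting jobs in priority order while the head of the queue fits.
        while self.waiting and self.waiting[0][2].gpus <= self.free:
            _, _, nxt = heapq.heappop(self.waiting)
            self.running.append(nxt)
            self.free -= nxt.gpus


sched = PreemptiveScheduler(total_gpus=8)
sched.submit(Job("batch-eval", priority=9, gpus=6))
sched.submit(Job("customer-train", priority=1, gpus=4))  # evicts batch-eval
print([j.name for j in sched.running])  # ['customer-train']
sched.finish("customer-train")
print([j.name for j in sched.running])  # ['batch-eval']
```

Checking whether eviction can actually make room before evicting anything avoids freezing low-priority work for no benefit.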

Strategic Takeaways for Your Enterprise

You may not be training a trillion-parameter model, but the architectural principles enabling the AI Superfactory are directly applicable to your Legacy Modernization initiatives.

1. Decouple Compute from State: Azure can move workloads globally because compute (the processing) is decoupled from state, which lives in durable storage.

- The Lesson: Keep your applications stateless, a fundamental tenet of Cloud-Native design. Avoid storing critical user session data on the web server itself; instead, use external services such as Redis for session and cache data or Azure SQL for persistent records. When an application is stateless, a server failure becomes a minor inconvenience rather than a catastrophe.

2. Design for Failure: Azure assumes hardware will fail and engineers software that thrives despite it.

- The Lesson: Abandon the pursuit of "unbreakable" servers and build resilient software instead; this is the core discipline of modern Enterprise Software Engineering. Implement "circuit breaker" patterns, which stop calling a failing dependency for a cool-down period so that one failing component does not cascade into a system-wide outage. Ensure long-running processes can resume from a checkpoint rather than restarting from zero.
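
A circuit breaker can be sketched in a few dozen lines. The version below is a minimal, single-threaded illustration with hypothetical names; production .NET services would more typically rely on an established resilience library such as Polly than on hand-rolled code.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when calls are short-circuited because the breaker is open."""


class CircuitBreaker:
    """Minimal breaker: opens after N consecutive failures, becomes half-open
    after a cool-down to allow a trial call, and closes again on success."""

    def __init__(self, failure_threshold: int = 3, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout_s:
            return "half-open"
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("dependency marked unhealthy; failing fast")
        try:
            result = fn()
        except Exception:
            self._failures += 1
            # A failed trial call while half-open re-opens the circuit immediately.
            if self.state == "half-open" or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result


now = [0.0]  # fake clock so the example is deterministic
breaker = CircuitBreaker(failure_threshold=2, reset_timeout_s=10.0, clock=lambda: now[0])


def flaky():
    raise ConnectionError("downstream timeout")


for _ in range(2):
    try:
        breaker.call(flaky)
    except ConnectionError:
        pass
print(breaker.state)  # open
now[0] = 11.0
print(breaker.state)  # half-open
print(breaker.call(lambda: "ok"), breaker.state)  # ok closed
```

Failing fast while the circuit is open frees callers from waiting on timeouts and gives the struggling dependency room to recover.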

OneCube "Level Up" Tip for the .NET Architect:

Embrace the "Cattle, Not Pets" Mentality for Legacy Modernization. In legacy IT, servers were treated as "pets": given unique names and lovingly maintained.

- The Shift: Adopt the "cattle" mindset. Servers should be identical, disposable units within a fleet.

- Action: Use Infrastructure as Code (IaC) tools like Terraform or Bicep to define servers declaratively, so any instance can be recreated from the same template on demand. This level of Business Automation is crucial for creating a maintainable, Scalable Architecture.

- The Goal: For the senior .NET Architect, the objective is simple: if you can delete a production server and your automated systems replace it without a customer noticing, you have achieved a truly Cloud-Native, resilient system.
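
IaC tools declare a desired state and let automation converge on it. The core idea can be illustrated with a toy reconciliation loop; the function names are hypothetical, and in practice the "launch" step would be an IaC deployment or a VM scale-set operation rather than a Python function.

```python
import itertools
from typing import Callable, Set


def reconcile(desired_count: int, running: Set[str], launch: Callable[[], str]) -> Set[str]:
    """One pass of a desired-state loop. Instances are interchangeable
    ("cattle"), so missing ones are replaced rather than repaired."""
    current = set(running)
    while len(current) < desired_count:
        current.add(launch())  # provision a fresh, identical instance
    for extra in sorted(current)[desired_count:]:
        current.discard(extra)  # scale in: any instance is as good as another
    return current


_ids = itertools.count(1)


def launch_from_template() -> str:
    """Hypothetical stand-in for provisioning from an IaC template or image."""
    return f"web-{next(_ids):03d}"


fleet = reconcile(3, set(), launch_from_template)  # web-001, web-002, web-003
fleet.discard("web-002")                           # someone deletes a server
fleet = reconcile(3, fleet, launch_from_template)  # web-004 takes its place
print(sorted(fleet))  # ['web-001', 'web-003', 'web-004']
```

Running this pass on a schedule, or on every change event, is what turns "delete a server and nobody notices" from an aspiration into a routine property of the system.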

Conclusion: The New Standard for Cloud Engineering

Azure’s AI Superfactory represents more than a mere capacity upgrade; it constitutes a fundamental rewriting of the Cloud Architecture playbook. By synthesizing the structural innovations of the Fairwater architecture, the speed-of-light advantages of Hollow Core Fiber, and the specialized computation of Maia and Cobalt silicon, Microsoft has effectively dissolved the distinction between a single server and a planetary network. Yet, raw power is nothing without control. The orchestration capabilities of Project Forge demonstrate that a truly Scalable Architecture demands software engineered to embrace failure, not merely resist it.

For modern enterprises, the imperative is clear: the future belongs to those who architect for resilience. While few organizations require a supercomputer, the principles powering one—decoupling, extreme automation, and fungibility—are the new baseline for Enterprise Software Engineering. This transition from rigid, monolithic systems to dynamic, Cloud-Native models is the essence of successful Legacy Modernization. At OneCubeTechnologies, we view these innovations not as abstract concepts, but as the practical blueprint for your next engineering milestone. The cloud has evolved. It is time for your architecture to follow suit.
